Bimanual Categories

The Dataset in Numbers

Some facts about the extension of the Bimanual Actions Dataset.

| Subjects | 6 subjects (3 female, 3 male; 5 right-handed, 1 left-handed) |

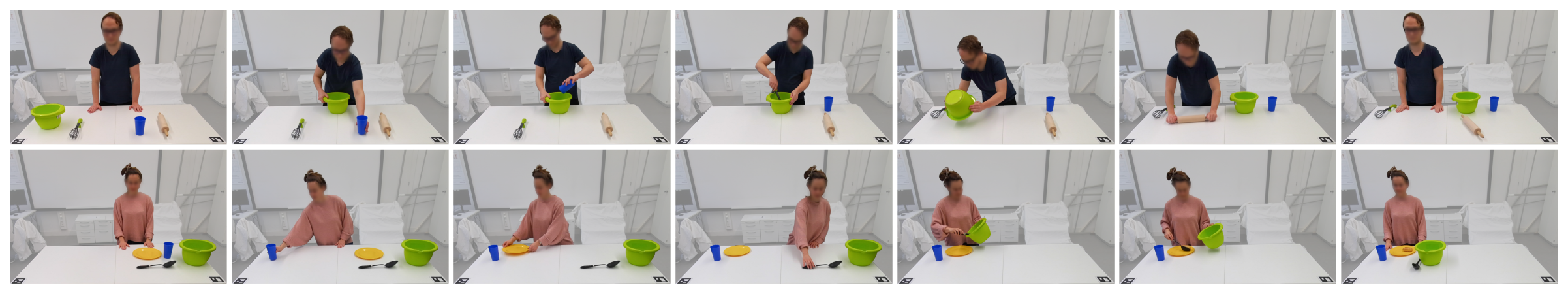

| Tasks | 2 tasks (in a kitchen context) |

| Recordings | 120 recordings in total (6 subjects performed 2 tasks with 10 repetitions) |

| Playtime | 1 hours and 2 minutes |

| Quality | 1920 px × 1080 px image resolution; 30 fps (640 px × 576 px for depth data) |

| Objects | 6 objects (bowl, cup, plate, rolling pin, spoon, whisk) |

| Annotations | Actions fully labelled for both hands individually |

Cite

If you use the Bimanual Categories Extension of the KIT Bimanual Actions Dataset, please consider citing our corresponding work.

@inproceedings{krebsleven2023,
  title = {Recognition of Bimanual Manipulation Categories in RGB-D Human Demonstration},
  author = {Krebs, Franziska and Leven, Leonie and Asfour, Tamim},
  booktitle = {IEEE-RAS 22nd International Conference on Humanoid Robots (Humanoids)},
  year = {2023},
  organization = {IEEE}
}

Data Download

In the following sections you can download the RGB-D dataset, derived data, and relevant documents. Clicking the icon will redirect you to the relevant part of the information page for an overview of the corresponding data format or other relevant information.

Category Ground Truth Labels

Data derived from the RGB-D dataset, such as human pose or object bounding boxes. The data of the original KIT Bimanual Actions Dataset and the extension are combined. From the original KIT Bimanual Actions Dataset, the following scenarios are included: cooking, cooking with bowls, pouring, wiping, and cereals. Data from subjects with the same number is combined across both datasets. This data can be used to reproduce the results of the publication. In addition, the data is mirrored to account for the underrepresentation of left-handed subjects. Each downloadable file contains the information for all subjects.

| File | Updated | Size | SHA256 hash |
|---|---|---|---|
| Training data (combined dataset + mirrored) | 13 Mar 2023 | 989.9 MiB | 19ef92e7f575f0caed47035802d3940448ed132952372587ed56ca2cf2a9ebc8 |
| Ground truth labels (all subjects and all scenarios) | 14 Mar 2023 | 322.1 KiB | f3fd58929051ca4d29ad8b82066d1f3cc30e5d56aa0516b1a12e909a80712002 |
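The SHA256 hashes listed in the download tables can be used to check that a file arrived intact. The following is a minimal sketch using only the Python standard library; the local file name `ground_truth_labels.zip` is a hypothetical placeholder, since the actual download names are not stated here.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, expected_hex):
    """Compare a file's digest with a hash copied from the download table."""
    return sha256_of_file(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Hypothetical local file name; the hash is the one listed for the
    # ground truth labels (all subjects and all scenarios).
    archive = Path("ground_truth_labels.zip")
    expected = "f3fd58929051ca4d29ad8b82066d1f3cc30e5d56aa0516b1a12e909a80712002"
    if archive.exists():
        print("OK" if matches_published_hash(archive, expected) else "MISMATCH")
```

Reading in chunks matters mainly for the RGB-D video archives, which are tens of GiB each.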

Combined Ground Truth Labels

This file contains the combined set of ground truth labels, including bimanual categories and action labels for the right and left hand, for all tasks of both the original dataset and the extension.

| File | Updated | Size | SHA256 hash |
|---|---|---|---|
| Ground truth labels (all subjects and all scenarios, categories and actions) | 30 Jul 2025 | 168.8 KiB | bf93308d8d05015bb6670b60b394f264e8a105778dd4a39fb0c72eb2593fe073 |

Extension Bimanual Actions RGB-D Dataset

The RGB-D dataset, split by individual subject, and annotations. The subjects are different from the subjects of the KIT Bimanual Actions Dataset.

| File | Updated | Size | SHA256 hash |
|---|---|---|---|
| RGB-D videos part 1/6 (subject 1) | 30 Jul 2025 | 39.6 GiB | 184ec9ab9e35e7129c3047820efb95b3f7c78c93a00d5c792e49afc463b6a68a |
| RGB-D videos part 2/6 (subject 2) | 30 Jul 2025 | 51.5 GiB | computing… |
| RGB-D videos part 3/6 (subject 3) | computing… | computing… | computing… |
| RGB-D videos part 4/6 (subject 4) | computing… | computing… | computing… |
| RGB-D videos part 5/6 (subject 5) | computing… | computing… | computing… |
| RGB-D videos part 6/6 (subject 6) | computing… | computing… | computing… |

Extension Bimanual Actions Dataset, Appendix: Derived Data

Data derived from the RGB-D dataset, such as human pose or object bounding boxes. Each downloadable file contains the information for all subjects.

| File | Updated | Size | SHA256 hash |
|---|---|---|---|
| 3D human body pose (Azure Kinect Body Tracking) | 30 Jul 2025 | 162.3 MiB | 067056d1699e4f24bf6f2975b85a9582d13056be72efa61deac95d6e2fb952d1 |
| 2D and 3D bounding boxes | 30 Jul 2025 | 191.0 MiB | f52a8c2f8971c9d77c3ba4ff51ddea180bd6abbe1a4f0bb24251ed24d5d2348f |
| 3D spatial relations | computing… | computing… | computing… |
| Camera calibration | 30 Jul 2025 | 6.1 KiB | d4740eb8cbf0551c3779b7749d881e245eea05ade51cf41825281bd1438994ab |

Documents

Relevant documents for this dataset.

| File | Updated | Size | SHA256 hash |
|---|---|---|---|
| Original briefing document | computing… | computing… | computing… |

Information

Bimanual Category Label Mapping

Refer to the following table for a mapping of bimanual category IDs and their symbolic name.

| ID | Category |
|---|---|
| 0 | no action |
| 1 | unimanual left |
| 2 | unimanual right |
| 3 | loosely |
| 4 | tightly symmetric |
| 5 | tightly asymmetric right dominant |
| 6 | tightly asymmetric left dominant |
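For use in code, the mapping above can be written as a small lookup table. This is only a sketch: the names `CATEGORY_NAMES` and `category_name` are hypothetical and not part of the dataset's tooling, while the IDs and symbolic names are copied from the table.

```python
# Bimanual category IDs and symbolic names, copied from the mapping table.
CATEGORY_NAMES = {
    0: "no action",
    1: "unimanual left",
    2: "unimanual right",
    3: "loosely",
    4: "tightly symmetric",
    5: "tightly asymmetric right dominant",
    6: "tightly asymmetric left dominant",
}


def category_name(category_id):
    """Return the symbolic name for a bimanual category ID."""
    try:
        return CATEGORY_NAMES[category_id]
    except KeyError:
        raise ValueError(f"unknown bimanual category ID: {category_id}") from None


if __name__ == "__main__":
    for category_id in sorted(CATEGORY_NAMES):
        print(category_id, category_name(category_id))
```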

RGB-D Coordinate System

In these recordings, ArUco markers are used to construct a global coordinate system. One marker is attached to each of the two frontal corners. The left one defines the origin of the global coordinate system.

Ground Truth

The new recordings were made to provide more data for the classification of bimanual categories in the context of kitchen activities. Therefore, the new recordings and the old scenarios with a kitchen context are labeled with these categories. The structure is similar to the other ground truth files:

{"category": [0, 0, 38, 3, 111, 5, 315, 3, 574, 5, 847, 3, 950, 0, 1014]}

Elements at even positions (counting from zero) are key frames. Elements at odd positions are the bimanual category ID that applies between the two neighbouring key frames.
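The alternating layout of key frames and category IDs can be unpacked into explicit segments. The sketch below uses the example array from above; the function names are hypothetical, and treating each segment as running from its start key frame up to (but not including) the next key frame is an assumption, since the boundary convention is not stated here.

```python
import json


def parse_category_segments(track):
    """Split [frame, id, frame, id, ..., frame] into (start, end, category_id) tuples."""
    key_frames = track[0::2]    # even positions: key frames
    category_ids = track[1::2]  # odd positions: bimanual category IDs
    if len(key_frames) != len(category_ids) + 1:
        raise ValueError("expected the list to start and end with a key frame")
    return list(zip(key_frames, key_frames[1:], category_ids))


def category_at(segments, frame):
    """Return the category ID of the segment containing `frame`, or None.

    Assumption: a segment covers start <= frame < end.
    """
    for start, end, category_id in segments:
        if start <= frame < end:
            return category_id
    return None


if __name__ == "__main__":
    raw = '{"category": [0, 0, 38, 3, 111, 5, 315, 3, 574, 5, 847, 3, 950, 0, 1014]}'
    segments = parse_category_segments(json.loads(raw)["category"])
    for start, end, category_id in segments:
        print(f"frames {start}-{end}: category {category_id}")
```

For the example, this yields seven segments, the first being frames 0 to 38 with category 0 and the second frames 38 to 111 with category 3.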

TODO: List for which of the old recordings those are available

Human Body Pose Data

In contrast to the old recordings, the new ones use Azure Kinect Body Tracking to extract the human body pose. Apart from the hands' bounding boxes in the 3D Object files, the raw body tracking data of the whole body is also provided in separate files. The structure of these files is as follows: every entry of the frames array contains an array with the different bodies recognized in this frame (always one entry in our case). For this body, the joint positions are listed in order of their joint index (see here).

{
  "bodies": [
    {
      "body_id": 1,
      "joint_positions": [
        [96.6239242553711, -952.4153442382813, 1994.73486328125],
        [94.67913818359375, -1012.8400268554688, 1946.5592041015625],
        [...]
        [22.749197006225586, -1078.6497802734375, 1924.5601806640625]
      ]
    }
  ],
  "frame_id": 0,
  "num_bodies": 1,
  "timestamp_usec": 51066
}
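A frame entry like the one above can be read with the standard `json` module. This is a minimal sketch: the helper name `joint_positions` is hypothetical, and the example frame is shortened to the first two joints shown above rather than the full joint list.

```python
import json


def joint_positions(frame, body_index=0):
    """Return the joints of one body in a frame as a list of (x, y, z) tuples.

    The list order follows the joint index used by the body tracker.
    """
    if frame["num_bodies"] <= body_index:
        raise ValueError("no such body in this frame")
    body = frame["bodies"][body_index]
    return [tuple(position) for position in body["joint_positions"]]


if __name__ == "__main__":
    # Shortened example frame: only the first two joints from the listing above.
    raw_frame = """
    {
      "bodies": [
        {
          "body_id": 1,
          "joint_positions": [
            [96.6239242553711, -952.4153442382813, 1994.73486328125],
            [94.67913818359375, -1012.8400268554688, 1946.5592041015625]
          ]
        }
      ],
      "frame_id": 0,
      "num_bodies": 1,
      "timestamp_usec": 51066
    }
    """
    frame = json.loads(raw_frame)
    for index, (x, y, z) in enumerate(joint_positions(frame)):
        print(f"frame {frame['frame_id']} joint {index}: x={x:.1f} y={y:.1f} z={z:.1f}")
```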

Data Mirroring

To balance right-handed and left-handed subjects, we mirrored the newly recorded data along the x-axis. To do so, the labels were swapped from right to left and vice versa, the relations "left of" and "right of" were exchanged, and the objects' x-coordinates were multiplied by -1. As a result, every subject is available in both a right-handed and a left-handed version.
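The mirroring steps can be illustrated as follows. This is a sketch under stated assumptions, not the script used to produce the mirrored data: all names are hypothetical, and applying the left/right swap to the bimanual category IDs (1 and 2, 5 and 6) is our reading of the label swap described above.

```python
# Assumption: left/right swap applied to the bimanual category IDs.
# Categories without a side (0, 3, 4) stay unchanged.
MIRRORED_CATEGORY = {0: 0, 1: 2, 2: 1, 3: 3, 4: 4, 5: 6, 6: 5}

# The spatial relations "left of" and "right of" are exchanged.
MIRRORED_RELATION = {"left of": "right of", "right of": "left of"}


def mirror_point(point):
    """Multiply the x-coordinate by -1 and keep y and z."""
    x, y, z = point
    return (-x, y, z)


def mirror_relation(relation):
    """Swap 'left of' and 'right of'; other relations are returned unchanged."""
    return MIRRORED_RELATION.get(relation, relation)


def mirror_category_track(track):
    """Mirror a [frame, id, frame, id, ..., frame] list; key frames stay as they are."""
    return [
        value if index % 2 == 0 else MIRRORED_CATEGORY[value]
        for index, value in enumerate(track)
    ]


if __name__ == "__main__":
    print(mirror_point((96.6, -952.4, 1994.7)))
    print(mirror_relation("left of"))
    print(mirror_category_track([0, 1, 38, 5, 111]))
```

Mirroring twice returns the original values, which is a quick sanity check for any implementation of this step.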

Additional information about the format of the 2D bounding boxes and the spatial relations can be found here.