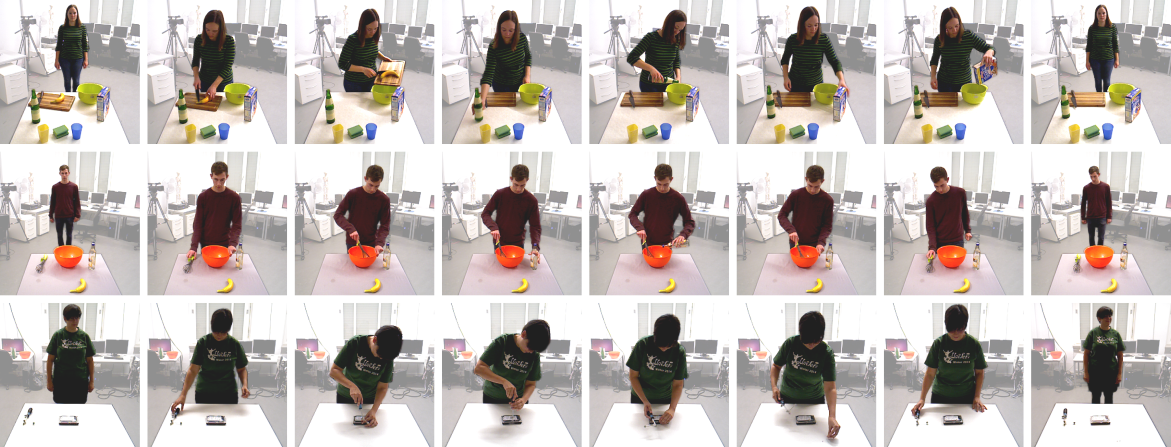

The Bimanual Actions Dataset is a collection of RGB-D videos, together with various extensions, showing subjects performing bimanual actions in a kitchen or workshop context. It was compiled primarily to support research on bimanual human behaviour, with the eventual goal of improving the capabilities of humanoid robots.

Download Original Dataset